Processing massive amounts of data in real time for demanding applications such as big data analytics, cloud transactions, and IoT continues to be a challenge for the IT industry. High-speed networks at 25, 50, and 100 Gb/s, combined with the rise of faster SSDs and new non-volatile memory (NVM) technologies, have addressed performance bottlenecks at the I/O level but have also shifted the bottleneck to the CPU. These CPU limitations are pushing IT managers to adopt new approaches in enterprise data center design that once benefited only the High Performance Computing (HPC) community.

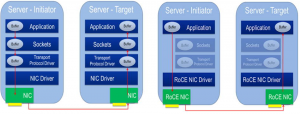

Remote Direct Memory Access (RDMA) and its Ethernet implementation, RDMA over Converged Ethernet (RoCE), provide a seamless, low-overhead, scalable way to relieve the CPU bottleneck with minimal changes to existing infrastructure.

A new RoCE Initiative whitepaper, *RoCE Accelerates Data Center Performance, Cost Efficiency, and Scalability*, explains the benefits of deploying RoCE and how it can address the changing needs of next-generation data centers. The document features recent case studies and outlines the following RoCE advantages:

- Freeing the CPU from Performing Storage Access

- Altering Design Optimization

- Future-Proofing the Data Center

- Presenting New Opportunities for the Changing Data Center

With these benefits, RoCE is a strong choice for improving compute performance over Ethernet while keeping scaling efficient and inexpensive. To learn more about RoCE’s impact on the evolving data center, download the whitepaper from the RoCE Initiative’s Resource Library today: http://www.roceinitiative.org/resources/